Executive Summary

A quiet technical divide is reshaping artificial intelligence development. While headline-grabbing models like GPT-5 capture attention, a more fundamental shift is underway: AI capabilities that can be trained with reinforcement learning are improving much faster than those that cannot [4]. This "reinforcement gap" is shaping which AI capabilities will lead the next wave of innovation, and which may stagnate despite significant investment. The implications extend beyond research labs. In 2025, AI startups are on track to capture more than 50% of all venture capital investment for the first time [5], while practical applications are already delivering measurable results: agricultural AI is cutting water usage by 30% [6], and AI-powered drug discovery is compressing years of antibiotic research into months [7]. Yet infrastructure failures, such as the fire that destroyed South Korean government cloud storage that had no backups [2], reveal how fragile these systems remain.

Key Developments

- Reinforcement Learning Gap: AI capabilities that are easy to train with reinforcement learning, typically those whose outputs can be checked automatically, are advancing significantly faster than those that are not, creating an uneven landscape of AI progress that could determine which applications succeed commercially [4]

- VC Investment Concentration: 2025 is projected to be the first year where AI startups capture over 50% of all venture capital funding, signaling a historic concentration of innovation resources in a single technology sector [5]

- MIT Lincoln Laboratory: The TX-GAIN supercomputer, optimized specifically for generative AI workloads, represents the most powerful AI computing infrastructure at any US university, targeting biodefense and materials discovery applications [9]

- California Regulation: SB 53 passed with bipartisan support, suggesting that AI safety regulation and innovation can coexist, contrary to claims that regulation would hamper US competitiveness with China [1]

- OpenAI Hardware Struggles: OpenAI and Jony Ive's collaboration on a screen-less AI device is facing significant technical challenges, suggesting the hardware interface for AI remains an unsolved problem [3]

Technical Analysis

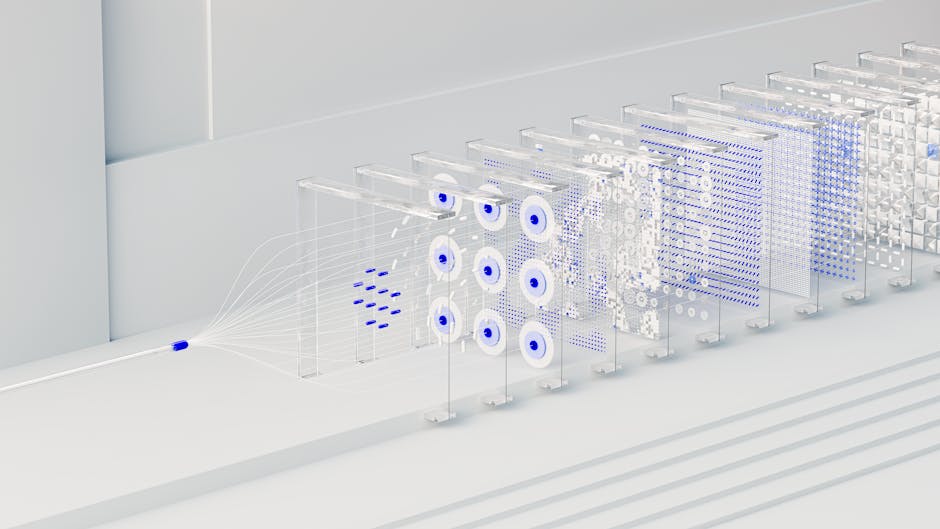

The reinforcement learning advantage stems from a fundamental asymmetry in how AI systems improve. Tasks with clear reward signals—like game playing, code optimization, or route planning—can iterate rapidly through trial and error [4]. Each attempt generates feedback that directly improves the model. By contrast, tasks requiring nuanced judgment, creative synthesis, or complex human preferences lack these clear signals, making improvement slower and more dependent on expensive human feedback.
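The trial-and-error loop described above can be illustrated with the simplest reinforcement-learning setting, a multi-armed bandit. The following is a minimal sketch under stated assumptions, not a method described in [4]: the epsilon-greedy agent, the payout probabilities, and the epsilon value are hypothetical choices for illustration. The agent never sees the true payout rates; it improves only because every action returns an immediate numeric reward.

```python
import random


def run_bandit(true_payouts, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent that learns which action pays best from reward alone.

    true_payouts: hypothetical success probability of each action (hidden from the agent).
    Returns (estimated payout per action, times each action was tried, total reward).
    """
    rng = random.Random(seed)
    n = len(true_payouts)
    counts = [0] * n        # how often each action has been tried
    estimates = [0.0] * n   # running mean reward observed for each action
    total_reward = 0.0
    for _ in range(steps):
        # Explore a random action with probability epsilon, otherwise exploit the best estimate.
        if rng.random() < epsilon:
            arm = rng.randrange(n)
        else:
            arm = max(range(n), key=lambda a: estimates[a])
        # The environment returns an immediate, unambiguous reward: 1 for success, 0 otherwise.
        reward = 1.0 if rng.random() < true_payouts[arm] else 0.0
        counts[arm] += 1
        # Incremental mean update: each attempt directly refines the agent's estimate.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward
    return estimates, counts, total_reward


if __name__ == "__main__":
    estimates, counts, total = run_bandit([0.2, 0.5, 0.8])
    print("estimates:", [round(e, 2) for e in estimates])
    print("times tried:", counts)
```

With these settings the agent should end up choosing the 0.8 action most often and estimating its payout close to 0.8. A task without a computable reward has nothing to put on the `reward = ...` line, which is the asymmetry the paragraph describes: improvement then has to come from slower, costlier human judgment.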

This dynamic is already visible in deployments built around fast feedback, whether or not the underlying technique is reinforcement learning in the strict sense. MIT researchers used generative AI to map how a narrow-spectrum antibiotic targets gut bacteria, compressing a process that typically takes years into a fraction of that time [7]. The key enabler was not just model scale but the ability to simulate molecular interactions and get rapid feedback on predictions. Similarly, Instacrops' agricultural AI achieves a 30% reduction in water use by optimizing irrigation through continuous sensor feedback loops [6].
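To show what a sensor feedback loop means in the simplest terms, here is a toy Python sketch of a proportional controller that decides how much to water from a daily soil-moisture reading. It is not Instacrops' system, whose design is not described here; the soil model, units, gain, and evaporation rate are all hypothetical.

```python
def simulate_irrigation(target=0.30, gain=500.0, days=30,
                        evaporation=0.02, initial=0.20, moisture_per_liter=0.002):
    """Toy proportional feedback loop for irrigation (all parameters hypothetical).

    moisture is a volumetric fraction between 0 and 1; water is in liters per day.
    Returns (total liters applied, list of end-of-day moisture readings).
    """
    moisture = initial
    total_water = 0.0
    history = []
    for _ in range(days):
        error = target - moisture                    # setpoint minus the latest sensor reading
        water = max(0.0, gain * error)               # proportional response; never negative
        moisture += water * moisture_per_liter - evaporation  # toy soil model
        moisture = min(max(moisture, 0.0), 1.0)      # keep the fraction physically valid
        total_water += water
        history.append(moisture)
    return total_water, history


if __name__ == "__main__":
    total, history = simulate_irrigation()
    print(f"water used: {total:.1f} L, final moisture: {history[-1]:.3f}")
```

With the default parameters, moisture settles at about 0.28 after the first day rather than exactly at the 0.30 target. That steady-state offset is characteristic of a purely proportional controller facing a constant loss such as evaporation; adding an integral term would remove it.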

Meanwhile, the infrastructure supporting these advances remains surprisingly fragile. South Korea's National Tax Service lost critical data when fire destroyed government cloud storage systems with no backup recovery options [2]. The incident underscores a broader pattern: organizations are deploying AI faster than they are building the operational resilience these systems require. Doing this reliably is expensive; Google's $4 billion investment in Arkansas data center infrastructure [8] shows the scale of capital expenditure needed to support AI workloads.

Operational Impact

- For builders:

- Prioritize AI applications where reinforcement learning applies naturally—tasks with clear metrics, fast feedback loops, and quantifiable outcomes will see faster capability improvements than open-ended creative tasks [4]

- Build comprehensive backup and disaster recovery systems before deploying production AI infrastructure; the Korean government incident demonstrates that cloud providers alone don't guarantee data safety [2]

- Use existing coding-agent tooling, such as Google's Jules with its command-line interface (Jules Tools) and API access, rather than building code-generation pipelines from scratch; these access points lower integration barriers significantly [10]

- Favor narrowly scoped applications where AI can demonstrate measurable impact (30% resource reduction, years-to-months compression) over broad general-purpose tools [6][7]

- For businesses:

- Expect continued VC concentration in AI to make non-AI fundraising significantly harder; either position your company as AI-enabled or prepare for a more challenging capital environment [5]

- Regulatory frameworks like California's SB 53 suggest that compliance and innovation can coexist; early adoption of safety practices may become a competitive advantage rather than a burden [1]

- Hardware interfaces for AI remain unsolved—OpenAI and Jony Ive's struggles suggest screen-less AI devices aren't imminent, so plan for traditional form factors in the near term [3]

- Regional AI infrastructure investments (MIT's TX-GAIN, Google's Arkansas facility) signal geographic clustering of AI capabilities; proximity to these hubs may matter for talent and partnership access [8][9]
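The backup recommendation for builders can be made concrete with a small Python sketch: copy a data file to several locations and confirm each copy by SHA-256 checksum before trusting it. The file and directory names are hypothetical, and the temporary directories in the demo are only stand-ins. In practice the copies would sit in physically and administratively separate locations, since a backup kept in the same facility can be lost to the same fire. A full disaster-recovery plan also needs scheduled runs, retention policies, and periodic restore tests, which this sketch does not cover.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(source: Path, backup_dirs: list[Path]) -> list[Path]:
    """Copy `source` into every backup directory and verify each copy by checksum.

    Raises OSError if any copy does not match the original byte for byte.
    """
    expected = sha256_of(source)
    copies = []
    for directory in backup_dirs:
        directory.mkdir(parents=True, exist_ok=True)
        destination = directory / source.name
        shutil.copy2(source, destination)  # copies file contents and metadata
        if sha256_of(destination) != expected:
            raise OSError(f"checksum mismatch for {destination}")
        copies.append(destination)
    return copies


if __name__ == "__main__":
    # Demo only: temporary directories stand in for physically separate sites.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        source = root / "records.db"  # hypothetical data file
        source.write_bytes(b"critical records\n" * 1000)
        copies = backup_and_verify(source, [root / "site_a", root / "site_b"])
        print(f"verified {len(copies)} copies")
```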

Looking Ahead

The reinforcement gap will likely widen before it narrows, creating a two-tier AI landscape in which some capabilities advance rapidly while others plateau. Organizations should audit their AI roadmaps to identify which applications benefit from reinforcement learning's advantages and which may require alternative approaches or longer timelines. If investors follow technical progress, the divide may also show up in startup outcomes: companies building in reinforcement-friendly domains may find both technical progress and capital access easier. Meanwhile, the regulatory environment appears to be stabilizing, and California's bipartisan approach suggests that reasonable safety frameworks need not significantly impede development. The real constraint may be infrastructure resilience: as AI systems become mission-critical, the gap between deployment speed and operational maturity is a growing risk that the industry has not fully addressed.